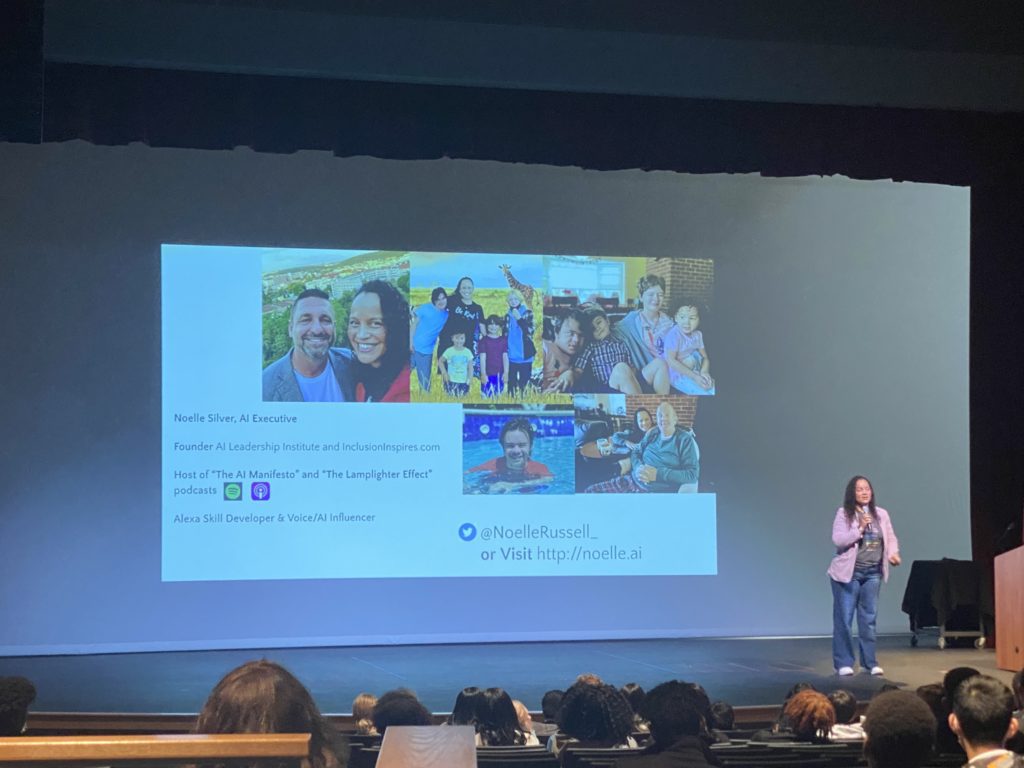

Noelle Silver Russell snapped to attention when she saw the email hit her inbox. Jeff Bezos, CEO of Amazon, had sent a company-wide call for folks passionate about artificial intelligence (AI) who were willing to work on a new project.

Russell, at the time a cloud architect at Amazon, said the company had just come off one of the largest failures in its history: the Fire Phone. People were hesitant to volunteer for a new project. Her manager even discouraged her from joining.

But Russell had a 12-year-old son with Down syndrome, so she knew some positive applications of artificial intelligence. Her father, who had suffered injuries in a bad car accident, had benefitted from AI, too.

“So that was my lens, right?” said Russell, an AI ethicist who now works at IBM. “When I got this email from Jeff Bezos, my lens was like, ‘Oh my gosh … this could be cool!’”

Russell joined the team and, in the first year, built over 100 applications for the new project: Amazon’s Alexa.

SparkNC connects students to high-tech fields

Russell spoke this fall at the 2022 Ethics and Leadership Conference, organized by SparkNC and hosted by the North Carolina School of Science and Mathematics (NCSSM). The conference, titled “Should We Hack Humanity,” focused on how AI can be used ethically and in ways to help students inside and outside of the classroom.

SparkNC launched in June as an initiative of The Innovation Project to promote careers in high-tech fields. Funded by the General Assembly and pandemic relief dollars in 2021, it is a cooperative with several school districts and focuses on advancing STEM pathways — particularly for students underrepresented in these fields, namely students of color and girls.

At the conference, Russell highlighted a quote often attributed to Ralph Waldo Emerson:

Bessie Anderson Stanley

The quote was actually written by an American writer, Bessie Anderson Stanley, she said. Russell’s point was that society often overlooks women, especially in high-tech fields. She holds fast to concepts of idea lineage and credit. That’s one thing that makes data and AI interesting to her.

“You get to preserve the lineage of who owns the data,” she said, “and that is one of my biggest ethical responsibilities as a data scientist.”

Russell said some of the key principles she’s learned in her career are inclusive engineering, which invites all types of people into rooms where decisions are made; multimodal development, which ensures maximum accessibility and improved productivity; and the ethics that drive the choices of data scientists and others in the tech field.

Diversity, justice, and AI

The conference included multiple breakout sessions along two tracks, one for students and another for adults. Student attendees first heard speakers share their experiences in engineering and AI. Later, students broke into small groups to engage with the conference’s speakers. Throughout the day, students asked questions about diversity and intersectionality in AI, user experience and satisfaction, data privacy and regulation, and the excitement and concerns of future technology.

The adult attendees first heard from NCSSM’s Joe LoBuglio and Charlotte Dungan, the chief operating officer of The AI Education Project, who talked about existing efforts to integrate ethical AI into K-12 classrooms. Later, these attendees heard from Richard Boyd, CEO of Ultisim and Tanjo Inc., who spoke about how responsible use of AI could transform K-12 education.

Attendees also got to hear Russell interview Renée Cummings, an AI ethicist and data science professor at the University of Virginia. The pair talked about crime prevention, privacy, and justice in AI.

“One of the things that I have tried to do since I entered the world of AI and data science is to ensure that we bring a justice-oriented and a trauma-informed approach to understanding the ways in which we use data,” Cummings said.

She talked about surveillance in society — from monitoring cell phone usage to using methods to ensure public safety. AI can have positive and negative uses, Cummings said, so we need to “better understand the power of technology and the power technology could have on your life.”

Responsibility and AI

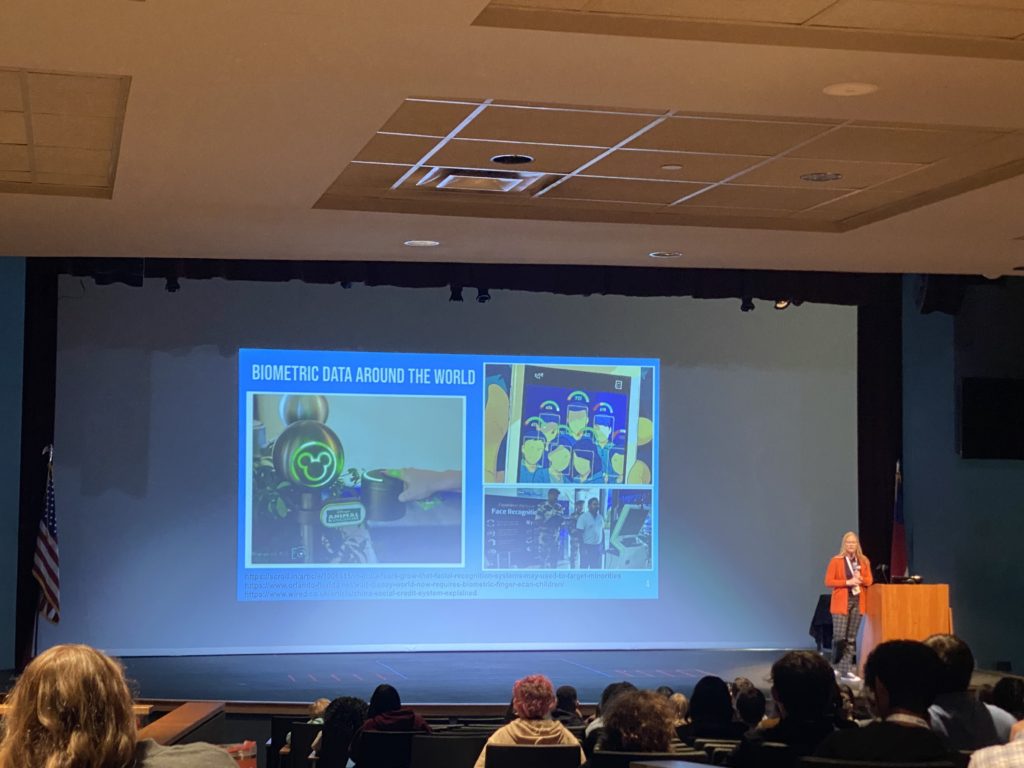

Dungan, who co-facilitates the designing of responsible AI curricula at the National Humanities Center, talked about biometric data and its uses — specifically how corporations and government use our DNA.

Biometric data can be used positively, Dungan said, as with AI in doctors’ offices that efficiently and effectively helps patients or finds cures for devastating diseases. Biometric data can also be used negatively, she said, highlighting how corporations can sell or use our data in ways people did not intend.

As she made remarks concluding the conference, Russell summarized the issue.

“I am a little bit worried today about companies that aren’t even having these ethical conversations,” she said. “They don’t realize the impact that their technology will have on humanity, and that’s why these discussions in this room are so important.”

Editor’s Note: A previous version of this article misstated Noelle Silver Russell’s name. It has been corrected.